recap 2025 - updates at OSSO

2025 – Finding the Turbo Button

A year of momentum. After the “certification heavy” focus of 2024, 2025 felt like shifting into a higher gear. We’ve seen our infrastructure move to 100G, our hardware embrace a new generation of speed, and our team grow into a tighter, faster unit.

Looking back at the last twelve months, the theme hasn’t just been “maintenance” — it’s been about refinement. We’ve been busy cleaning out the old (goodbye spinning rust and legacy loadbalancers) to make room for the new (hello EPYC processors and Nokia core routers). It was a year where the “young guard” stepped up to become veterans, and the office itself got a glow-up — both in terms of lighting and team spirit.

Here is the story of our 2025:

- The Infrastructure Level-Up: moving from 10G to 100G, the Nokia migration, IPv6, faster machines, more GPUs, bigger disks. Purging the legacy.

- Tooling & Security: how Change Management became our second nature and the custom tools (Auditif, Suave) that keep us sane.

- The Open Source Ecosystem: some notes about Open Source projects that were (not) relevant this year.

- The Human Element: welcoming Damian, celebrating the return of Rona, the “Great House Hunt” that gripped the office, and WHY2025.

- OSSO HQ 2.0: Let There Be Light. The office also got leveled up with fancy lighting options.

We strive to get a lot done. And when we do something, we want to do it right. Because we refused to settle for a half-baked celebration, we’ve decided to postpone the 20-year OSSO anniversary. Don’t worry. It’s not scrapped, just delayed.

The Infrastructure Level-Up

A year goes by fast. But we cleared a massive amount of technical debt.

Out with the old

We had delayed the migration from Ubuntu/Jammy to Ubuntu/Noble because of various unresolved bugs. It wasn’t until the second half of 2025 that we began migrating most of our machines. While the process is ongoing, we’re already looking ahead to a swifter move to Ubuntu/Resolute (26.04).

We had also delayed the migration away from the “old” load balancers. The new ones had been running since 2020, but the migration had stalled because of various legacy “customizations” that were non-trivial to port. The new machines are faster, have a more modern config and have since gotten additional security. So it was really great that Seppe picked it up and drove this migration project to the end. In this final month of the year, we finally got to shut the old machines down. Nice!

This old hardware will end up on our hardware decommissioning pile. Those of you who have visited our office in the past may have come to expect a big graveyard of old hardware in the corner, but that tower is now gone. Hardware that no longer met our performance or energy-efficiency standards, but still had plenty of life left, has been sent to Ukraine to support their effort against the aggressor. And the rest? Picked up for parts. Our office corner is finally clear: clean, clean, clean.

We continue to update all our managed Kubernetes clusters, both Kubernetes itself and the core components on it, which we’ve dubbed subops.

We recently realized we have an eight-year-old in the house. An eight-year-old Kubernetes cluster, that is. Despite its age, it’s still healthy, maintained, and keeping up with the youngsters.

More consistent logging and monitoring

Another one of those lingering tasks was standardized monitoring for certain applications. As always, we need to prioritize; so not everything had gotten the attention we’d have liked.

Again, the fresh blood got to work, adding and refining various zabbix-agent-osso metrics and triggers: improved load balancer monitoring, monitoring for PostgreSQL/Citus/Patroni, and monitoring for MinIO.

Meanwhile, Grafana access has been rolled out to more of you. We’ve been aggregating logs for quite a while, but the platform is now stable, polished, and ready for you to dive in.

Enhancing the networks

closso.network, our 3 spine-leaf network where your managed Kubernetes lives, has had 100G links for over 5 years to facilitate the internal traffic. osso.network, which facilitates the external traffic, got its upgrade this year, with new Nokia routers, 100G IP transit and 100G between all POPs, including a direct 100G link to Amsterdam for each Groningen zone.

We aren’t currently utilizing all that capacity, but it’s nice to have some leeway; especially so we can handle bursts of (malicious) traffic.

With the help of 2Hip, the osso.network core has been migrated to Nokia 7750 SR-1 routers. As a nice bonus, we directly connected the Mediacentrale with our WP and ZL zones on 100G, providing a redundant 100G path all the way to our uplinks in Amsterdam. This ensures OSSO HQ and other tenants can Slack/mail/surf/whatever with minimal latency.

For closso.network we are adding more 100G links between zones, nearing a full mesh between spines. And now we’re beginning preparations to expand this to 400G to facilitate 100G links to individual nodes.

IPv6 on closso.network

Ah, IPv6 — the world’s most famous “neglected” protocol. Emilia took the lead this year, rolling out native support across our closso.network infrastructure. This means we can now assign IPv6 addresses to VMs and the load balancers directly.

For those of you who need that 100% internet.nl rating, you know where to find us. =)

While we were adding new IP space, we also took the opportunity to prune old networks and legacy assignments, ensuring the entire stack is future-proof.

Drives, drives, drives, and MinIO

This year marks the end of spinners. Aside from massive big-data applications, the cost-savings of spinning metal are no longer worth the performance trade-off. From here on out, it’s NVMe all the way.

For us, this was also the year of big data. The one place where we still do rotating metal.

We’ve been using MinIO as an S3-compatible object store since last year. And this year, we added a few big clusters for one of our customers.

We managed several petabytes of raw storage for their large-scale project, but the data was flowing in so fast that adding new pools became a constant race to stay ahead of the capacity curve.

Because of changed requirements we didn’t have the right setup from the start, so we had to change mid-project. And then you find out that decommissioning many bytes – to rebalance/change disks/parity – takes a loooong time.

We’ve been leveling up our MinIO CLI skills, as well as browsing the source to find the hidden _MINIO_DECOMMISSION_WORKERS setting, which finally gave us the speed we needed to beat the clock. 😅

GPUs in the cluster

As you might imagine, in the current zeitgeist you need to have at least some parts of your operation doing AI. While we remain pragmatic about the AI hype, ignoring the shift in technology would be a disservice to our clients.

So, we have been testing GPUs and evaluating Kubernetes operators, mainly HAMi. Some tests with vLLM have been performed.

(Testing at OSSO HQ was not appreciated by everyone. These enterprise GPUs are NOISY!)

For those that need it, we’ve now begun deploying dedicated GPUs within Kubernetes clusters.

New worker generations

There’s a new generation of our Kubernetes worker nodes. They’re upgraded to EPYC 4584PX (16 cores with “3D V-Cache”), DDR5 ECC UDIMM and PCIe 4.0 NVMe storage, delivering nice results with existing environments.

Check this graph:

The results speak for themselves. This graph shows the moment the new nodes took over — tell me that isn’t a juicy upgrade.

Stability

The “move fast and break things” mantra isn’t what you’re used to from OSSO, and we are glad to say we’ve kept the outages to a minimum. (Even though we did come close a few times due to the Turbo Button.)

There was the issue with a leaf switch that caused about two hours of downtime for certain services. Many of you will not have noticed anything. Others had severe degradation during that period if their single-instance (virtual) machines were connected there.

This outage did spur immediate action, prompting us to provide dual links to (almost) all hypervisors. Next time a switch goes dark, I hope to be able to write that no one noticed anything.

The upgrades of various MariaDB clusters that we had done in previous years were likely the reason that we saw far fewer incidents of MariaDB corruption this year. The fact that many are moving new workloads to PostgreSQL, where corruption is even rarer, also helps. We did battle with one severe case of corruption, on a setup where Citus shards the PostgreSQL databases and all disks had filled up. That was not fun. And we’ll definitely make sure that doesn’t happen again.

Technical stability is only one side of the coin; the other is the human process that prevents mishaps from reaching production in the first place.

Tooling & Security

An anecdote: during a recent audit for one of our healthcare clients, we received what might be the ultimate professional validation: the auditors noted they rarely see hosting providers who “have their shit together” quite like we do.

(A pat on the back for ourselves.)

From change management and inventory control to risk mitigation and system hardening, we received a resounding thumbs up across the board. However, the real proof was in the follow-up: after granting us the official stamp of approval, they mentioned they had already started recommending us to potential new clients.

If the auditor is doing your sales, then that’s a compliment.

Change Management

In 2024, when we started implementing Change Management for our services with the highest security category, it still felt like a chore: documenting what you’re about to do and why.

Today, we can happily say that the entire team has fully embraced the workflow. Yes, it slows some “quick” changes down a bit. But the accountability and explainability are worth so much. We no longer have to dig through old Slack threads or timesheets to figure out who tweaked a setting ten months ago or why: the change ticket holds the answer.

Audit tooling

We’ve been working on refining our tooling around audit and access logs. Our custom suite of tools (natsomatch, subnact, auditif, trumpet and suave) works together to provide a nice audit trail.

Auditif, which started as Seppe’s graduation project, aggregates data from across our infrastructure to maintain a comprehensive trail of host activity. It keeps track of valid logins on hosts and VPN servers, user data, and change ticket history. By integrating with Netbox and Kleides, it gathers the context necessary to automatically validate security events, cross-referencing login keys and ticket statuses in real time. Any discrepancies trigger immediate alerts, allowing the team to investigate potential issues the moment they occur.

Naturally this isn’t foolproof yet and we’re still dealing with false positives. But we’re getting there.
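To give a feel for the kind of cross-referencing described above, here is a minimal sketch in Python. It is not Auditif’s actual code or data model: the `Login` record, the `find_discrepancies` function and the plain dict/set lookups (standing in for Netbox, Kleides and the ticket system) are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Login:
    """Hypothetical login event: who logged in where, with which key."""
    user: str
    host: str
    key_fingerprint: str


def find_discrepancies(logins, authorized_keys, open_tickets):
    """Return (login, reason) pairs for logins that cannot be explained.

    authorized_keys: dict mapping user -> set of known key fingerprints
    open_tickets: set of (user, host) pairs covered by an open change ticket
    """
    alerts = []
    for login in logins:
        if login.key_fingerprint not in authorized_keys.get(login.user, set()):
            # The key is not registered for this user at all.
            alerts.append((login, "unknown key"))
        elif (login.user, login.host) not in open_tickets:
            # Valid key, but no change ticket explains the access.
            alerts.append((login, "no open change ticket"))
    return alerts
```

In a real setup, both lookups would be fed from live inventory and ticket data, and the reasons would be routed to an alerting channel rather than returned as a list.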

CVE Management with a nice audit trail is still a pesky one. But, we’re now aiming to get this in good shape in 2026.

Rollout of cat3 extra security

Over the past few months, we have transitioned our customers who handle sensitive personally identifiable information (PII) — particularly in the healthcare sector — to our most hardened environments.

We’re taking the good stuff that we were mandated to implement for our category 4 environment and deploying that to our category 3 customers. This is an ongoing process, in which we’re gradually implementing more and more of the extra hardening and auditing. For those in this category, this transition introduces a few necessary guardrails: changes may require more documentation (more red tape), and network policies are strictly enforced. It’s a bit more work, but the resulting peace of mind is worth it.

On our security page you can find an up-to-date overview of our certification state. The rest of the website will get some additional love in 2026 to better reflect what we’ve been working on the last couple of years.

While much of our security focus is internal, we remain deeply committed to the wider ecosystem that makes our work possible.

The Open Source Ecosystem

SONiC

Last year, part of the team was in Germany, doing a training course on SONiC, which might replace our Cumulus switch infrastructure at some point. Unfortunately, the bug reports we filed at the end of 2024 have not been resolved. Our code fixes have been approved, but they have not been merged. We hope that they too get some turbo in 2026, so we can pursue the switch replacement project again.

Sponsoring Alpine

The Alpine Linux project recently sent out a letter asking for hosting support.

This community-driven, independent Linux distribution, which focuses on simplicity, security and resource efficiency, is best known for its use in small Docker images. They had moderate demands, and we were more than happy to sponsor hardware and bandwidth.

OSSO tooling

- In our ossobv GitHub repositories you can find various tools that might be useful. Many of the existing projects and tools have gotten minor updates.

- The vcutil project got a README to promote individual tools. Look around, maybe there is a useful CLI tool you’ve always been missing.

- We created ipgrep — a blazing fast utility for searching IPv4 and IPv6 networks. Instead of regular expressions, it uses CIDRs to match. When constructing or auditing route tables, ACLs and firewalls, this tool shines.
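The idea of matching by CIDR instead of by regular expression can be sketched with Python’s standard `ipaddress` module. This is only an illustration of the concept, not how ipgrep itself is implemented, and the `cidr_grep` and `line_matches` names are made up for this example.

```python
import ipaddress
import re

# Loose candidate pattern; ipaddress does the real validation afterwards.
_CANDIDATE = re.compile(r"[0-9A-Fa-f:.]+(?:/\d{1,3})?")


def line_matches(line, needle):
    """Return True if any address or network on the line lies within needle."""
    for token in _CANDIDATE.findall(line):
        try:
            found = ipaddress.ip_network(token.rstrip("."), strict=False)
        except ValueError:
            continue  # not an address, e.g. a port number or a word fragment
        # subnet_of() raises TypeError across IP versions, so compare those first.
        if found.version == needle.version and found.subnet_of(needle):
            return True
    return False


def cidr_grep(lines, cidr):
    """Return the lines that mention an address or network inside cidr."""
    needle = ipaddress.ip_network(cidr, strict=False)
    return [line for line in lines if line_matches(line, needle)]
```

Searching for `10.0.0.0/8` this way finds a line containing `10.1.2.3` as well as one containing `10.20.0.0/16`, which a plain text match on the string `10.0.0.0/8` would miss.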

MinIO

Above, we mentioned that we’ve been doing lots of data in MinIO. MinIO looked like it was going to be the promising multi-node, sharded high availability object storage we needed.

However, they have dropped the ball and chosen to withdraw from the open source community.

At OSSO we don’t mind paying for development. But we do mind if you’re closing your Open Source project, effectively stealing and nullifying the volunteer hours of Open Source contributors.

At this point we don’t know where MinIO is headed yet. Maybe they’ll open up again. Maybe they’ll get forked. Or maybe we’ll need to look for a replacement; possibly something like RADOS Gateway (RGW), the object-store solution provided by Ceph. After all, we’re already widely using Ceph for block (RBD) and file (CephFS) storage.

While software projects can be unpredictable, the people behind the keyboards are what keep OSSO moving forward.

The Human Element

Fortunately, the OSSO crew has seen plenty of growth and change this year — both inside the office and in their personal lives.

Team

We are thrilled to welcome Damian — our resident 3D-printing deity — to the operations team. He’s impressively tall — so much so that even Herman has to look up when talking to him.

Damian’s hobbies include his homelab, model trains, and games. His first task has been cleaning up the mess that our Dostno testing environment had become.

He can still be a bit shy, but this will wear off soon enough. He’s currently being integrated into the core ops team, so expect to see him in a Slack channel near you very soon.

Rona is back from maternity leave. We are happy to report that the newest member of the extended OSSO family is thriving and Rona will start to come in more regularly.

Good stuff!

Emilia has been leveling up. Last year she was the trainee; today she’s one of our on-call heroes and a proper network engineer. When she’s not at the office, you might find her at the NOC at your nearest network or hacker conference.

It wasn’t all highlights; a member of our small team faced significant personal loss this year, which understandably had an impact. We acknowledge this with respect. ❤️ 😢

The Great House Hunt

Half the team spent their lunch breaks on Funda this year.

Buying a house is famously impossible in the Netherlands these days.

This makes it all the more impressive that Harm, Robin and Seppe all completed their quest for a new abode within the same two months.

Congrats!

WHY2025

In 2025, most of the team brought themselves and their families to WHY2025, the Dutch hacker camp near Amsterdam.

We attended sessions ranging from deep-dives into 0-day CPU vulnerabilities to whimsical talks on bit-flipping attacks.

We also made sure to take excellent care of our inner selves with some top-tier outdoor cooking.

There were various activities for both kids and adults. And lights everywhere.

Unfortunately, even the outdoors offer no escape from nightly incidents. The 24/7 life continues, even under a tent.

Definitely worth repeating. Maybe EMF next?

The glowing lights of WHY2025 must have left an impression, because when we got back to Groningen, we decided our own office could use a bit more atmosphere (and a lot more automation).

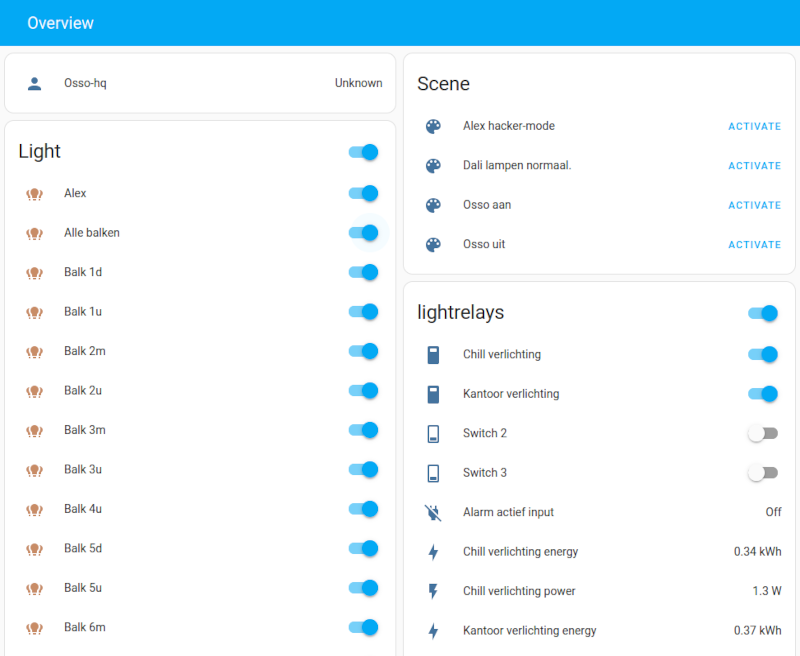

OSSO HQ 2.0: Let There Be Light

During the fall of 2025, we had a second Herman running around the office: a super nice bloke with a serious passion for domotics.

This Herman2, a regular at the Groningen Maakplek, has been busy fixing our office lighting. He has been installing hundreds of individually addressable LEDs and spotlights, and integrating those with our existing systems. This groundwork allows us to create next-level Christmas lights, and whatever else we have time for in the future.

During a power outage, our old system broke down. It turned out to be a happy accident. Not only did it speed up the transition to the new system, but we also gained a Big Red override button for those rare moments when the automation decides to take a nap.

Thanks Herman2!

Looking Forward to 2026

Is it 20 years of OSSO, or are we already hitting 21? Either way, it’s a milestone worth celebrating.

Good times!

We hope to welcome you to our HQ soon. And as always, see you around in Slack!